Choosing the Right Product Prioritization Framework for Your Business

Product managers have many things to consider when choosing a prioritization framework. Learn about the top techniques and the pros and cons of each.

Product managers have the lofty task of determining how to gather feedback from teams and prioritize the design and development accordingly. Several different product prioritization frameworks exist to help evaluate tasks, features, and updates and prioritize them according to various goals. But how do you decide which prioritization framework is best for your organization or next project? In this article, I’d like to arm you with some information about the options so you can decide.

Top Prioritization Frameworks for Product Managers

Let’s first dive into the types of product prioritization frameworks available. There are many frameworks to consider, but these are the most commonly used:

RICE

Kano

MoSCoW

Opportunity Scoring

Value vs. Effort (or Complexity)

Story Mapping

Weighted Scoring

RICE

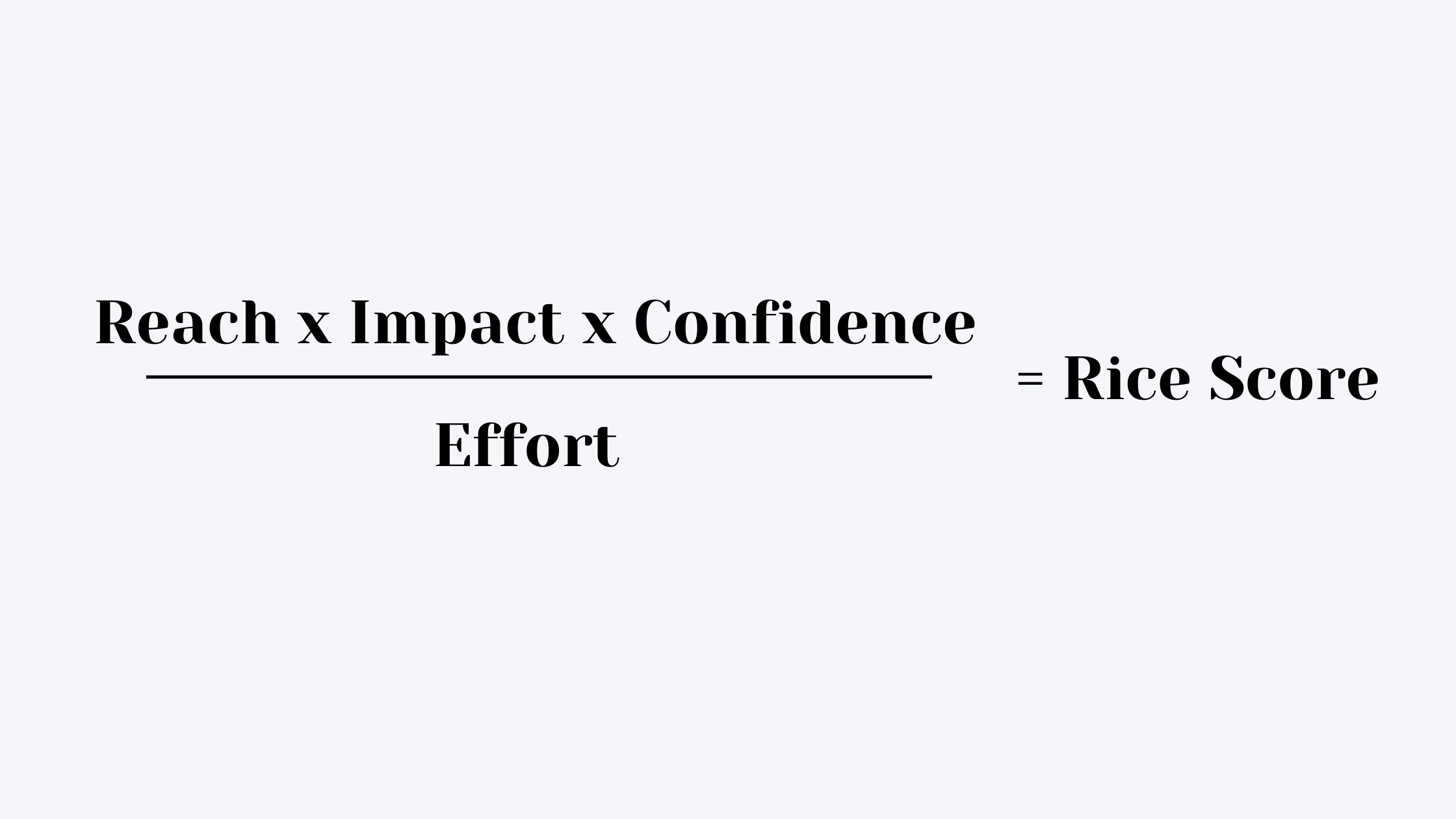

RICE stands for reach, impact, confidence, and effort. This scoring system measures each feature or update by each of the four metrics.

Reach measures the number of people or users the feature will impact. This should be the number of users who use that feature during a given time period, such as per month or per quarter.

Impact measures how much the feature or update will affect users. Use a multiple-choice scale that runs from minimal impact to massive impact, such as 0.25 for minimal impact up to 3 for massive impact.

Confidence measures how confident you are about the impact and reach values. If you have actual data that you trust, your confidence is likely high, whereas, if you are guessing at the reach and impact without actual data, your confidence will be low. This should use a percentage from 0% for no confidence to 100% for high confidence.

Effort measures the amount of time it will take to design, develop, test, and launch the feature. This number should be the total person-months across all team members involved, from start to finish.

After these individual values are determined, the RICE score for each feature is calculated by multiplying reach, impact, and confidence and then dividing by effort: RICE score = (Reach × Impact × Confidence) ÷ Effort.

The higher the RICE score, the greater the expected impact of the feature or update relative to the effort needed to complete it.
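As a rough illustration, the sketch below scores a few features using the commonly cited RICE formula, (reach × impact × confidence) ÷ effort. The feature names and numbers are made up for the example, and the `Feature` class and `rice_score` function are hypothetical helpers, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # users affected per period (e.g. per quarter)
    impact: float      # 0.25 (minimal) up to 3 (massive)
    confidence: float  # 0.0 to 1.0, i.e. 0% to 100%
    effort: float      # total person-months


def rice_score(feature: Feature) -> float:
    """Return (reach * impact * confidence) / effort for one feature."""
    if feature.effort <= 0:
        raise ValueError("effort must be greater than zero")
    return feature.reach * feature.impact * feature.confidence / feature.effort


# Hypothetical backlog; every value here is an assumption for illustration.
features = [
    Feature("Saved searches", reach=2000, impact=1, confidence=0.8, effort=2),
    Feature("Dark mode", reach=5000, impact=0.5, confidence=0.5, effort=1),
    Feature("SSO login", reach=500, impact=3, confidence=1.0, effort=3),
]

# Highest score first.
ranked = sorted(features, key=rice_score, reverse=True)
for f in ranked:
    print(f"{f.name}: {rice_score(f):.0f}")
```

With these example numbers, the low-effort feature ranks first even though its impact and confidence are modest, which shows how strongly effort influences the result.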

Kano

The Kano model measures features along a matrix to plot satisfaction of a feature and its functionality.

Satisfaction, plotted along the Y-axis, measures how delighted or excited users are about a feature. The scale runs from Total Dissatisfaction at the bottom to Total Satisfaction at the top.

On the X-axis, we measure functionality, which shows how well a feature functions or whether a particular need was met. Each feature is plotted from None on the left if it isn’t functional at all to Best on the right if it performs the tasks at hand extremely well.

Each feature is plotted on a matrix to show how well it functions and whether customers or users are satisfied by it. This helps to identify whether investing in a feature’s functionality actually makes customers happier. It also helps to separate the basic features that meet user needs without exciting users from the features that do excite them, and to show whether those exciting features fully deliver what users need.

MoSCoW

The MoSCoW method is used to identify which features are important and which ones are not. In the MoSCoW technique, features are placed into four categories (which make up the acronym): must have, should have, could have, and won’t have.

Must have features are non-negotiable. They may be the flagship features that are required for functionality or for legal or safety reasons. If you identify a feature as must-have, it means you are unable to launch the product without it.

Should have features are the next level of importance. They are important features to users, but the product would still function without them.

Could have features are nice to include. They are less important features than others and could add some value if included, but they won’t make a large impact on whether users are satisfied with the product. If time or budget doesn’t allow, these features could get moved to a later release.

Won’t have features are not important and are out of scope or won’t add any value to the product. These features will either be considered later (possibly with adjustments) or won’t be considered at all.

The MoSCoW method is a simple categorization technique, so no math is required and no numerical values are applied. The features are simply sorted into the prioritization categories, from must have to won’t have, to help decide which features to include in a product release.

Opportunity Scoring

Opportunity Scoring is a prioritization framework that plots the importance and satisfaction of a feature.

Users are asked to rate each feature on a scale of 1 to 10 for two questions:

How important is this feature or outcome to you?

How satisfied are you with the existing feature or solution?

Then the answers are plotted on a matrix to see which features are the most important and whether the current product satisfies the customer’s needs. This scoring model helps to identify features that are most important to customers but that could benefit from improvement. It may also reveal features that you thought were important to invest in but that users don’t find much value in.
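One simple way to read the survey results without drawing the matrix is to average the two ratings per feature and look at the gap between them. The sketch below does that. The gap calculation (average importance minus average satisfaction) is an assumption made for this example rather than a prescribed part of the framework, and the survey data and the `summarize` helper are hypothetical.

```python
from statistics import mean

# Hypothetical survey responses: one (importance, satisfaction) pair per
# respondent, each rated on a scale of 1 to 10.
responses = {
    "Export to CSV": [(9, 3), (8, 4), (10, 2)],
    "Custom themes": [(3, 7), (4, 8), (2, 6)],
    "Search filters": [(8, 8), (9, 7), (7, 9)],
}


def summarize(ratings):
    """Return (avg importance, avg satisfaction, gap) for one feature."""
    importance = mean(r[0] for r in ratings)
    satisfaction = mean(r[1] for r in ratings)
    # Assumed heuristic: a large positive gap suggests an important
    # feature that is currently underserved.
    return importance, satisfaction, importance - satisfaction


summary = {name: summarize(ratings) for name, ratings in responses.items()}

# Largest gap first: these are the candidate opportunities.
by_gap = sorted(summary, key=lambda name: summary[name][2], reverse=True)
for name in by_gap:
    importance, satisfaction, gap = summary[name]
    print(f"{name}: importance={importance}, satisfaction={satisfaction}, gap={gap}")
```

A feature with a negative gap, like the themes example here, is one where users are already more satisfied than the feature’s importance would call for, so further investment may not pay off.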

Value vs. Effort

Value vs. Effort, sometimes called Value vs. Complexity, is used to identify how valuable a feature is compared to the amount of work needed to implement it. Value and effort are scored for each feature and then plotted on a matrix.

Value is the benefit the feature has to your customers or to your company. This can include the potential revenue the feature can bring in as well as how this feature will meet the needs of its users.

Effort takes into account the amount of time, effort, and cost that will be involved in creating the feature. This includes planning, design, development, testing, and additional operational efforts. It also measures the amount of risk and complexity involved in creating the feature.

Once these two metrics are scored, features can be plotted on a prioritization matrix to easily see which have the most value compared to how little effort they will take to implement. If time or budget is a factor, this method can help to identify the most important features to focus on in a short amount of time.
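The matrix can also be approximated in code by sorting features into quadrants. The sketch below assumes both value and effort are scored from 1 to 10 and splits the matrix at the midpoint of that scale. The quadrant labels, the midpoint, and the example features are all assumptions for illustration; your team may define them differently.

```python
def quadrant(value: float, effort: float, midpoint: float = 5.5) -> str:
    """Classify a feature scored 1-10 on value and effort into a quadrant."""
    high_value = value > midpoint
    high_effort = effort > midpoint
    if high_value and not high_effort:
        return "quick win"       # high value, low effort
    if high_value and high_effort:
        return "major project"   # high value, high effort
    if not high_value and not high_effort:
        return "fill-in"         # low value, low effort
    return "low priority"        # low value, high effort


# Hypothetical features with (value, effort) scores.
features = {
    "One-click reorder": (9, 3),
    "Recommendation engine": (8, 9),
    "Footer redesign": (3, 2),
    "Legacy report builder": (2, 8),
}

for name, (value, effort) in features.items():
    print(f"{name}: {quadrant(value, effort)}")
```

Features that land in the quick win group are the natural first picks when time or budget is tight.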

Story Mapping

Story Mapping is used to visually tell the stories and feedback of your customers or users. It bases the importance of each feature on the user’s experience with it. In a Story Map, categories are displayed at the top of each column to show the customer journey and all the steps that could be involved for each customer. This product prioritization technique helps to visualize each step a user takes within a product and how features align with those steps.

After the categories are laid out, each task or feature is prioritized from top to bottom to display the order in which you will work on or release them. This shows which stages each feature release will affect and helps to spread feature releases evenly across the different stages of the customer journey.

Weighted Scoring

Lastly, the Weighted Scoring prioritization framework is used to help you determine which features should be included and when. The following steps are taken to create weighted scores.

Metrics or categories of scoring are identified to show how you will score each feature. These may include customer satisfaction, revenue potential, cost, user experience, etc.

A weight is then given to each metric. This is a percentage of how important that metric is to the company or the product. The total of all weights will add up to 100%.

Then features are listed and scored from 1 to 100 on how they meet each of the metrics listed. These scores should identify how well that feature meets a given metric.

Once those scores are completed, each score is multiplied by the weight of its metric from the second step. The weighted numbers for each feature are then added up to get that feature’s total.

The higher the number calculated for each feature, the higher priority that feature should have.
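The steps above can be sketched in a few lines of code. In this example the weights are written as whole percentages that must add up to 100, and each feature is scored from 1 to 100 per metric. The metric names, weights, features, and the `weighted_score` helper are all hypothetical.

```python
# Assumed metrics and weights, in percent; they must total 100.
weights = {
    "customer_satisfaction": 40,
    "revenue_potential": 35,
    "user_experience": 25,
}

# Hypothetical features, each scored 1-100 on every metric.
features = {
    "Onboarding checklist": {
        "customer_satisfaction": 80, "revenue_potential": 40, "user_experience": 90,
    },
    "Usage-based billing": {
        "customer_satisfaction": 50, "revenue_potential": 90, "user_experience": 40,
    },
    "In-app chat": {
        "customer_satisfaction": 70, "revenue_potential": 60, "user_experience": 70,
    },
}


def weighted_score(scores: dict, weights: dict) -> float:
    """Multiply each metric score by its weight and add up the results."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100%")
    if scores.keys() != weights.keys():
        raise ValueError("every metric needs exactly one score")
    return sum(scores[metric] * weight for metric, weight in weights.items()) / 100


totals = {name: weighted_score(scores, weights) for name, scores in features.items()}

# Highest total first.
for name in sorted(totals, key=totals.get, reverse=True):
    print(f"{name}: {totals[name]}")
```

Changing the weights can reorder the list, which is why agreeing on them up front matters.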

Pros and Cons of Each Product Prioritization Framework

Now that we’ve reviewed each of the common prioritization techniques, let’s consider the pros and cons of each.

RICE

Pros

Creates an objective score, which can be used to easily prioritize features.

Considers four important metrics to give a more complete score for each feature, combining user data with business metrics.

Cons

Although confidence is part of the score, if limited data is available the scores may be arbitrary.

Kano

Pros

Simple method using only two metrics — satisfaction and functionality.

You can easily see how well each feature functions compared to how satisfied users are with it. This helps to see if investing in certain features matters to your users.

Places importance on user experience and feedback.

Cons

Scoring is subjective and may feel arbitrary to users, which can affect outcomes.

It doesn’t take into account operational or business metrics such as cost, time, revenue potential, etc.

MoSCoW

Pros

Easy method to identify features that are the most important to keep in a release.

No calculations are required.

Cons

It doesn’t take into account operational or business metrics such as cost, time, revenue potential, etc.

Teams may place a large number of features in must haves. The method then offers no further way to prioritize among those “must have” features.

Opportunity Scoring

Pros

Simple scoring method using two metrics — satisfaction and importance.

Can be visualized on a matrix to easily see where each feature falls on the satisfaction and importance scale.

Easily identifies opportunities: features that are important to customers but that do not currently meet their needs.

Cons

Scoring is subjective, so two users who feel the same way about a feature might still give it different scores.

It doesn’t take into account operational or business metrics such as cost, time, revenue potential, etc.

Value vs. Effort

Pros

An objective numerical value is determined, which helps to easily prioritize features.

Two-metric scoring is simple and easy to measure.

Flexible—value and effort can be determined by each organization differently.

Cons

While the flexibility of value and effort is a pro, it could become a con if scorers do not align on a relative scoring system.

Scores can be biased, since they are often based on estimates rather than hard data.

Large teams might find it hard to come to a full consensus.

Story Mapping

Pros

Looks at the customer journey and user experience to prioritize features and ensure each stage is being considered.

Helps to easily identify sprints for product releases.

Allows you to easily see the top priority features.

Involves the whole team’s input to map each feature.

Cons

Only considers the user experience and doesn’t measure cost, effort, revenue potential, etc.

The whole team will have to come to a consensus, which can be difficult to achieve.

There is no score calculated to easily identify the winners.

Weighted Scoring

Pros

Places more weight on how well the feature meets the metrics that are more important, instead of giving equal value to all.

An objective numerical value is calculated, which helps to easily prioritize each feature.

Cons

Team members may not agree on the weight of each metric.

Which Prioritization Framework Should You Use?

Which prioritization technique a product manager should use depends on several factors. Some questions to consider are:

Do you need to consider user experience, business objectives, or both?

Are budget and time important to consider?

Are you choosing a framework for a new product or an update of an existing one?

How many features are you considering?

How many users or internal team members will be involved in the evaluation process?

For each of the prioritization frameworks, we’ll look at the situations where it works best.

RICE

The RICE method is best used when you have SMART (specific, measurable, attainable, relevant and timely) metrics. If you are looking for an objective scoring method that looks at a variety of user and business metrics, RICE may be a good method to use. Although revenue isn’t used explicitly, it can be factored in as part of reach and impact.

If you don’t have a lot of data, the RICE method may result in arbitrary and biased scores. It also doesn’t take into account the cost of technology other than to factor in time. So if you need to consider hiring specialized developers, this technique won’t factor that in. Additionally, although user metrics are used, it doesn’t consider actual user feedback.

Kano

The Kano model is often used when you want to help show how much a feature actually matters to customers and if it needs improvement. It can help to prioritize feature updates that are important to users but that could be improved upon.

Since Kano provides only user feedback, this model is not helpful in determining prioritization based on time, budget, or revenue potential.

MoSCoW

The MoSCoW method is best used to prioritize features based on what will make the product function or stay in compliance. When the importance of a feature to the user or business is the only factor that matters, this method can be used to easily identify the most important features. The MoSCoW method is also good for gathering input from team members with a non-technical background.

If you have an optimistic team who thinks everything is a must have or should have feature, this method may not help you narrow down what to add to a release. It also doesn’t consider time and cost, so if those are important, you may not want to use the MoSCoW method.

Opportunity Scoring

Opportunity scoring is helpful when you want to understand the user’s point of view in a simple way. If you want to find the best opportunities to make your customers more satisfied with your product, this method will help you identify those areas for improvement.

On the other hand, since opportunity scoring only considers user feedback, other business objectives are not included. If budget, time, or revenue potential are important to factor in, this model will not provide the data you need.

Value vs. Effort

If you’re looking for a simple model that will help identify quick wins, important features that may take more time, and high-effort, low-value features, the Value vs. Effort prioritization method may be for you. This method may also be useful for new products where you don’t have user feedback to draw on.

If you have a long list of features, this method may not be the best option to choose. Other methods will also be better at incorporating user experience than this model.

Story Mapping

Story Mapping is great when you want to work as a team to quickly map features. If the customer journey and having a full set of features for each stage is important, this method will help to understand how features fit within the journey and prioritize them accordingly. When user experience is at the center of your objectives, Story Mapping can help to prioritize features that will meet the needs of users all along their journey.

On the flip side, if revenue, time, and budget need to be factored in, Story Mapping on its own won’t be helpful. Also, this method is best used when all team members can get together to map the story together. So, if a large team is involved or is not able to meet at once, you likely won’t get the results you are looking for.

Weighted Scoring

Weighted scoring is helpful when some metrics are more important than others and you don’t want to consider all metrics equally. Since a score is calculated, prioritization can easily be determined, which can help if you want to cut off a release at only a certain number of features. This is especially important when you have a large number of features that you are considering.

This is a more complex model, though, and it may be more complicated than some product managers need. If team members from multiple job functions would weight some metrics higher than others, it may be hard to come to an agreement on the weighted percentages.

What Prioritization Framework Does Meticular Prefer to Use?

With our focus on UX Design, at Meticular we have incorporated story mapping into our Microsprints. That being said, we also combine some aspects of other frameworks to ensure we are meeting business goals. Rarely do we story-map a set of features without having a discussion around cost and effort. Often we break down features into phases, since most clients are anxious to get an MVP version into the wild. My advice to you is that just because the frameworks are well defined, as we’ve discussed above, it doesn’t mean you can’t modify them slightly to better meet your needs.

After considering the top product prioritization frameworks, what will you use for your next product release? Still unsure which method is best for you? Meticular can help guide you to choose the best model for your project. Contact us to see how we can help bring your product to life.